If you ranked the key ingredients of high-quality video experiences, video latency would easily be in the running for a medal. Narrow the competition to live content and there’s no question: low video latency is king.

Broadcast video networks have a long track record of getting low latency right. They use components, workflows and streaming protocols fine-tuned to scale high-quality, low-latency experiences cost-effectively to millions of concurrent viewers.

But delivering low latency reliably and cost-effectively remains a challenge for many newer cloud-based OTT streaming services.

Here’s what video providers launching new OTT streaming services can learn from traditional broadcast video networks to drive cost-effective, high-quality, low-latency experiences at scale.

Why does low-latency video streaming matter?

Live video experiences are a powerful draw for audiences. Whether it’s sports, reality shows, breaking news, network premieres or otherwise, there’s something enduringly powerful in seeing events unfold in real time.

But high latency kills the immersion that makes live content so powerful—seeing a goal 45 seconds after you received an alert about it on social media is enough to drive consumers away from your service for good.

Even for recorded content, frequent buffering issues (which usually show up as the spinning wheel) are enough to send users packing. And it’s not just the viewing experience that’s under threat. Persistent video latency issues can negate many of the wider benefits of migrating your core video network infrastructure to the cloud.

It doesn’t matter that your new all-IP video network is in theory more scalable, efficient, economical, data-rich and so on if you can’t guarantee the fundamentals of consistently low latency.

So let’s dig into the causes of high latency in video networks to learn how broadcast mitigates them (and how OTT streaming services can do the same).

What causes high latency?

Put simply, high video latency is caused by delays in the delivery path—stemming from congestion or missing data as content travels from its source to its destination. The problem is that the open, unmanaged internet was never designed to deliver high-quality, low-latency live content at scale (and especially not cost-effectively).

Video providers need to build a managed video network pipeline in which every step of the streaming workflow is optimised for low latency: the encoder, the packager, the CDN and the player.
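Because end-to-end (glass-to-glass) latency is roughly the sum of the delay each stage adds, it helps to treat it as a budget. The sketch below assumes a simplified additive model and uses purely illustrative per-stage figures (not measurements); the `Stage` type and function names are hypothetical. It totals the budget and flags the stage worth attacking first.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    delay_s: float  # delay this stage adds, in seconds (illustrative only)


def glass_to_glass(stages: list[Stage]) -> float:
    """Simplified model: end-to-end latency is the sum of per-stage delays."""
    return sum(s.delay_s for s in stages)


def dominant_stage(stages: list[Stage]) -> Stage:
    """The stage adding the most delay is the first candidate for optimisation."""
    return max(stages, key=lambda s: s.delay_s)


# Hypothetical figures for a segment-based pipeline.
pipeline = [
    Stage("encoder", 1.0),
    Stage("packager", 6.0),  # waits for a whole 6 s segment before publishing
    Stage("cdn", 0.5),
    Stage("player", 18.0),   # buffers three 6 s segments before playback
]

print(f"glass-to-glass: {glass_to_glass(pipeline):.1f} s")
print(f"biggest contributor: {dominant_stage(pipeline).name}")
```

With these assumed numbers the player buffer dominates, which is why shrinking the unit of delivery (covered next) pays off more than shaving the CDN hop.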

What’s the solution to high latency?

Let’s start with the encoder and the packager.

Traditional Adaptive Bitrate Streaming (ABR) protocols used to deliver video over IP networks typically prioritise reliability (i.e. continuity of service) over the performance needed for low latency.

ABR is a complex topic, but here’s what the basic flow looks like:

Traditional ABR encoders and packagers receive data from the live video source and break it into segments, typically six seconds long. At the other end, ABR clients download and buffer those segments from Content Distribution Networks (CDNs). Because an ABR client can’t display any content until it has at least one (and often up to three) segments buffered, viewers sit many seconds behind the live action, and any congestion or bandwidth shortfall that delays a segment quickly stalls playback.
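To put numbers on that, here is a minimal sketch. It assumes the player must hold whole segments before playback starts and ignores encoding, packaging and network delay, so it gives only the floor that the buffer alone imposes. The function name is hypothetical.

```python
def min_buffer_delay_s(segment_s: float, segments_buffered: int) -> float:
    """Floor on the delay added by the player buffer alone.

    Simplifying assumption: playback cannot start until `segments_buffered`
    complete segments have been downloaded, so the viewer trails live by at
    least that much media time.
    """
    if segment_s <= 0 or segments_buffered < 1:
        raise ValueError("need a positive segment length and at least one segment")
    return segment_s * segments_buffered


for n in (1, 2, 3):
    delay = min_buffer_delay_s(6.0, n)
    print(f"{n} x 6 s segments buffered -> at least {delay:.0f} s behind live")
```

Three six-second segments already put the viewer 18 seconds behind before any other stage is counted. Shrinking the unit the player waits for, rather than the number of units, is what chunked delivery targets.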

The solution is to break content into smaller chunks that can be delivered while the rest of the segment is still being encoded, using techniques such as Chunked Transfer Encoding for standards like DASH and Apple Low-Latency HLS, and newer protocols like HESP.
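For the DASH case, chunked delivery relies on HTTP/1.1 chunked transfer coding. The origin can start answering a segment request before the segment is finished and forward each small media chunk (in CMAF, a `moof`+`mdat` pair) as soon as the encoder produces it. The sketch below shows only the wire framing HTTP/1.1 defines: each chunk is sent as its size in hex, CRLF, the data, CRLF, and a zero-size chunk ends the body. The function name and placeholder payloads are hypothetical. A real origin would let its HTTP server library do this framing.

```python
from typing import Iterable, Iterator


def chunked_body(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Frame media chunks using HTTP/1.1 chunked transfer coding.

    Each chunk can be written to the socket as soon as it exists, so the
    player receives the start of a segment before the segment is complete.
    """
    for chunk in chunks:
        if not chunk:
            continue  # a zero-size chunk would end the body prematurely
        yield f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk followed by an empty trailer section


# Placeholder payloads standing in for two CMAF chunks of one segment.
fragments = [b"moof+mdat #1", b"moof+mdat #2"]
wire = b"".join(chunked_body(fragments))
print(wire)  # b'C\r\nmoof+mdat #1\r\nC\r\nmoof+mdat #2\r\n0\r\n\r\n'
```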

The requirements of efficient low latency

Bandwidth efficiency is critical to low-latency streaming over all-IP infrastructure, both to reduce congestion on the network and to maximise cost efficiency. That means video providers also need to optimise the far end of the video network pipeline: the CDN and the ABR client.

To economise on bandwidth (and therefore costs), broadcasters use a process called statistical multiplexing: consolidating multiple video sources into a single, bandwidth-efficient channel and automatically sharing bits between them based on each source’s frame-by-frame image complexity.
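The core idea of statistical multiplexing can be sketched as a bit-allocation step that runs for every frame (or group of frames). A fixed pool of bandwidth is shared among channels in proportion to how complex each picture currently is, so a fast-moving sports feed borrows bits from a static news desk. This is a simplified illustration, not a description of any particular vendor’s multiplexer. The function name, the per-channel floor and the complexity scores are all assumptions.

```python
def statmux_allocate(pool_kbps: float,
                     complexity: dict[str, float],
                     floor_kbps: float = 0.0) -> dict[str, float]:
    """Split a fixed bandwidth pool across channels for one allocation interval.

    Every channel first receives `floor_kbps`; the remainder is shared in
    proportion to each channel's current complexity score.
    """
    spare = pool_kbps - floor_kbps * len(complexity)
    if spare < 0:
        raise ValueError("pool too small to give every channel its floor")
    total = sum(complexity.values())
    alloc = {}
    for name, c in complexity.items():
        # Fall back to an even split if every picture is trivially simple.
        extra = spare * c / total if total > 0 else spare / len(complexity)
        alloc[name] = floor_kbps + extra
    return alloc


# One interval: the sports feed is busy, the other two are fairly static.
alloc = statmux_allocate(20_000, {"sports": 3.0, "news": 1.0, "movie": 1.0},
                         floor_kbps=2_000)
print(alloc)  # {'sports': 10400.0, 'news': 4800.0, 'movie': 4800.0}
```

The pool total never changes; only its division does, which is what lets several channels fit in a bandwidth budget that would not cover each of them at its own peak rate.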

Video providers with OTT streaming services can achieve similar results using Smart Rate Control. It works much like statistical multiplexing, using variable bitrate (rather than constant bitrate) to dynamically match bandwidth to frame-by-frame image complexity.
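The efficiency gain from variable bitrate can be illustrated with a deliberately simplified model. It assumes, hypothetically, that the bits a frame needs to reach a target quality scale linearly with its complexity score. To hold quality on its hardest frames, a CBR stream must run at the peak rate all the time, whereas a VBR stream spends only what each frame needs. This is not Smart Rate Control’s actual algorithm, which the article does not describe. The names and numbers are illustrative.

```python
def bits_needed(complexity: list[float], bits_per_unit: float) -> list[float]:
    """Simplified model (assumption): bits for target quality grow linearly with complexity."""
    return [bits_per_unit * c for c in complexity]


def cbr_total(complexity: list[float], bits_per_unit: float) -> float:
    """CBR: every frame gets the budget of the hardest frame, so quality never dips."""
    return len(complexity) * max(bits_needed(complexity, bits_per_unit))


def vbr_total(complexity: list[float], bits_per_unit: float,
              peak_bits: float = float("inf")) -> float:
    """VBR: each frame gets what it needs, optionally capped at a peak rate."""
    return sum(min(b, peak_bits) for b in bits_needed(complexity, bits_per_unit))


# Two easy frames, one average frame, one hard frame (e.g. a burst of motion).
frames = [0.5, 0.5, 1.0, 2.0]
print(cbr_total(frames, 100.0))  # 800.0
print(vbr_total(frames, 100.0))  # 400.0
```

The optional `peak_bits` cap trades some quality on the hardest frames for a predictable maximum rate.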

The second part of the efficiency challenge is to scale more streams to more clients without incurring more costs. Traditional ABR delivery is unicast: a 1:1 relationship between streams and viewers, so every additional viewer adds another full copy of the stream to the network.

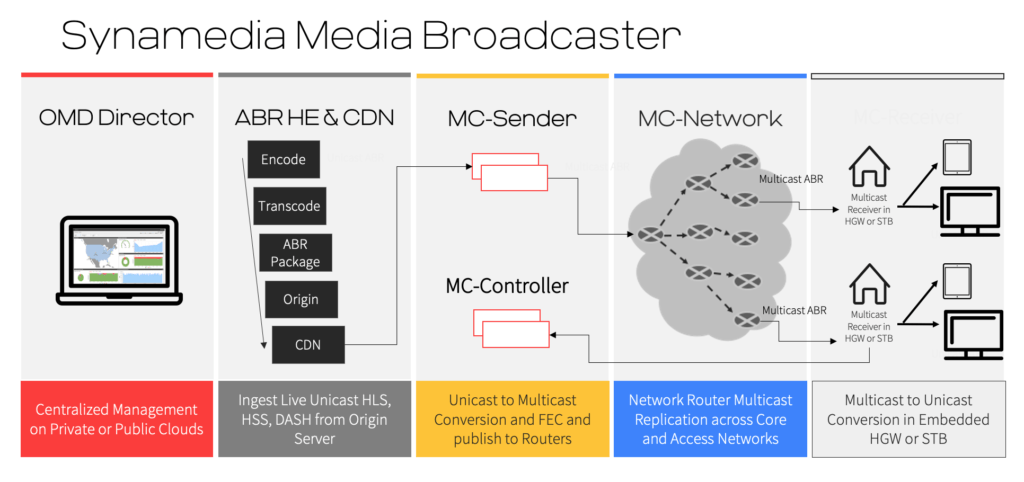

Multicast-ABR economises the video traffic sent between a single CDN and multiple subscribers.

Multicast senders, networks and receivers enable a one-to-many relationship between source video content and multiple subscribers. At the end of the chain, the multicast receiver converts the single multicast feed back into multiple unicast feeds for set-top boxes and home gateways.
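The saving is easy to quantify. With unicast, load on the shared network grows with the number of viewers. With multicast, it grows only with the number of channels (and renditions) being carried. The back-of-the-envelope sketch below assumes every rendition of each live channel is multicast once and ignores protocol overhead and repair traffic. The function names and the bitrate ladder are hypothetical.

```python
def unicast_load_kbps(viewer_bitrates_kbps: list[float]) -> float:
    """Unicast: one copy of the stream per viewer."""
    return sum(viewer_bitrates_kbps)


def multicast_load_kbps(ladders_kbps: dict[str, list[float]]) -> float:
    """Multicast-ABR: one copy of each rendition per channel, however many viewers."""
    return sum(sum(ladder) for ladder in ladders_kbps.values())


ladder = [1_000.0, 3_000.0, 6_000.0]  # hypothetical renditions for one channel
viewers = [6_000.0] * 10_000          # 10,000 viewers on the top rendition

print(f"unicast:   {unicast_load_kbps(viewers) / 1e6:.1f} Gbps")
print(f"multicast: {multicast_load_kbps({'live-sport': ladder}) / 1e3:.1f} Mbps")
```

Under these assumptions the same audience costs 60 Gbps as unicast but about 10 Mbps as multicast. The multicast figure stays flat as the audience grows.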

Low-cost low-latency for OTT video streaming

Guaranteed low latency is the last hurdle for video providers moving away from traditional broadcast infrastructure towards the all-IP future. With the right video network, it’s more than possible to match broadcast’s performance while also benefiting from everything the cloud has to offer.

(And that’s exactly what we’re delivering for Yes as it phases out its DTH satellite service in favour of an all-IP video network.)

The key is a multi-stage, fully-managed IP video network that doesn’t rely on the open, unmanaged internet to deliver content from source to client. Here’s what the pipeline looks like:

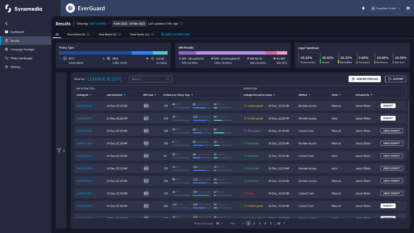

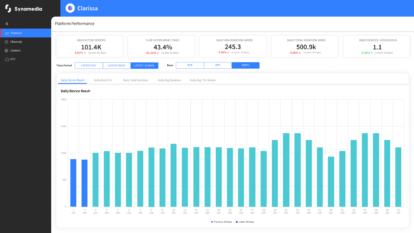

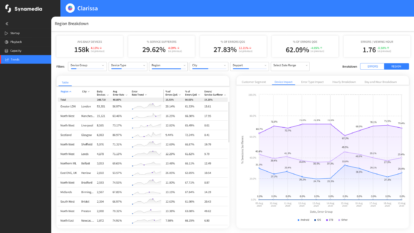

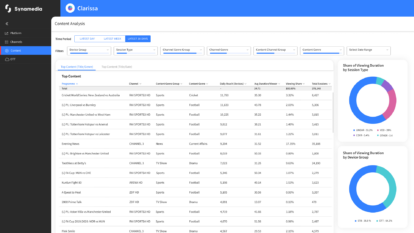

To find out more about how we’re helping video providers like you make the leap, take a look at our low latency demo on the Synamedia Digital Showcase.

About the Author

Bart is responsible for marketing Synamedia’s video network product and architecture portfolio, covering cloud, workflow automation, ABR technology and microservices. With over 20 years’ experience in the video industry, Bart demonstrates how Synamedia’s solutions and services help service providers to deliver premium video services securely and reliably to any screen.

Prior to joining Synamedia, Bart spent 12 years with Cisco, and before that was at Scientific-Atlanta, which was subsequently acquired by Cisco. Before that he held various engineering and product management roles.

Bart holds a Master of Science in Applied Engineering from KaHo Sint-Lieven (now part of the University of Leuven) in Belgium.